When maintaining a large and open project, usually around 10k-200k lines of code, it is suggested that you add an architecture document. This document discusses the high-level design and structure of a project, which does help developers in understanding the codebase and functionality. However, this website, bashbreach.com, is nowhere near 10k-200k lines of code. But I still thought understanding a website’s architecture would be useful. You can read more about ARCHITECTURE.md here.

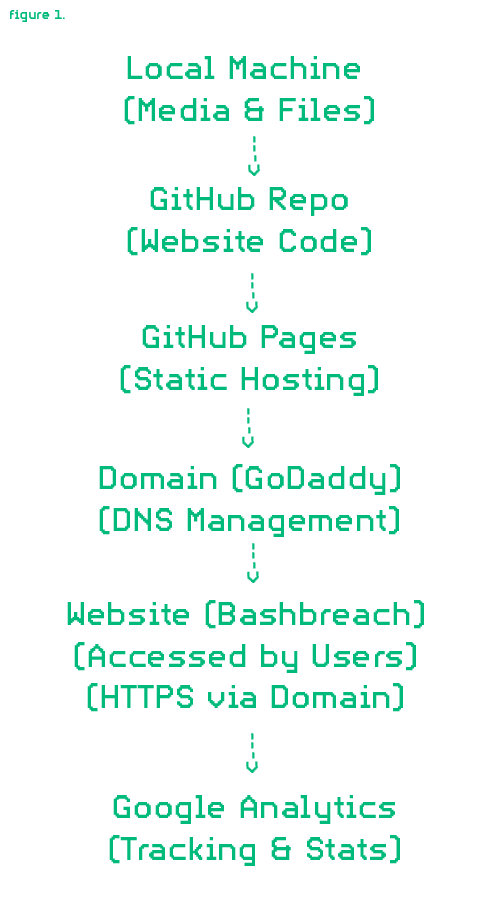

This website is built and designed through my own custom HTML, CSS, JavaScript, and other web-dev languages. No ready-to-use templates or third-party website builders are used. Bashbreach is statically hosted through GitHub pages, so builds & Deployments are performed via the traditional deploy-from-a-branch method, rather than via GitHub Actions. Future enhancements may involve using GitHub Actions to automate builds and optimize workflow though. DNS for domain name management is done with GoDaddy. So far, this website is meant to be lightweight, fast, and free of unnecessary dependencies, making it easy to maintain and scale. That might change later on though. Such as right now media images, like the entry logo’s or the TLDR News image, are hosted on my local machine. Once more images/files are used on this website, I will move on to using web services for hosting media and content (which bashbreach will then pull from) such as AWS or Cloudflare. Regarding tracking and analytics, the website does use Google Analytics, just so I can see the number of users going on the site, and which pages are popular.

Local Machine:

The Website’s content are stored locally on my machine, including media like logos, images, and the actual HTML, CSS, and JavaScript files.

I can then push these files into GitHub, to statically update the website for all users to see.

GitHub Pages:

GitHub Pages serves the static website directly from the repository.

When updates are pushed to GitHub, the GitHub Pages service automatically reflects those changes on the live site. This is done through the branch deploy process, meaning you deploy directly from a specific GitHub branch (e.g., main).

Later on, as the site becomes bigger, switching to GitHub Action would be the better choice. Quicker and faster.

GoDaddy (DNS Management):

The website's IP address and DNS is configured and managed on GoDaddy. This makes sure that the domain name, bashbreach.com, points to the GitHub repo the site is hosted on.

Since the domain name is configured, it is "bashbreach.com", instead of "mnhmomo.github.io"

User Access (HTTPS & Website):

Users access the website, and the DNS configuration in GoDaddy ensures the request reaches GitHub Pages.

HTTPS: Since you want a secure connection, browsers typically use HTTPS, ensuring that the data between the user's browser and the website is encrypted. GitHub Pages supports HTTPS.

Google Analytics:

Google Analytics is integrated into the website. Once users visit the site, basic activity can be seen, such as how many active users have visited the site and which pages are popular.

This provides insights into the website's performance, including user behavior and page popularity.

The /robots.txt file allows search engines to index most of the site while restricting administrative and private directories. It explicitly allows .html pages for proper indexing and includes a reference to the sitemap.xml file (https://bashbreach.com/sitemap.xml) to help search engines efficiently crawl the site.

A robots.txt file is a text file that instructs automated web bots on how to crawl and/or index a website. Web teams use them to provide information about what site directories should or should not be crawled, how quickly content should be accessed, and which bots are welcome on the site.

So far, that is the architecture of the website. Bashbreach is still small so there are no heavy dependencies yet, but I'm sure that will change as more updates are made. Once that happens, the architecture will change.